Scale a Cluster with CA

Deploy a Sample App

We will deploy a sample nginx application as a Deployment with a single replica (one Pod):

cat <<EoF > ~/environment/cluster-autoscaler/nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-to-scaleout
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        service: nginx
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx-to-scaleout
        resources:
          limits:
            cpu: 500m
            memory: 512Mi
          requests:
            cpu: 500m
            memory: 512Mi
EoF

kubectl apply -f ~/environment/cluster-autoscaler/nginx.yaml

kubectl get deployment/nginx-to-scaleout
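Before scaling, it can help to confirm that the initial rollout has finished. The helper below is a minimal sketch: the function name `wait_for_rollout` and the 120s default timeout are our own assumptions, not part of the workshop, while `kubectl rollout status` is the standard kubectl subcommand.

```shell
# Hypothetical helper: block until the named Deployment has finished rolling out.
# $1 = Deployment name, $2 = optional timeout (defaults to 120s, an assumed value).
wait_for_rollout() {
  kubectl rollout status "deployment/$1" --timeout="${2:-120s}"
}
```

For example, `wait_for_rollout nginx-to-scaleout` should return once the single nginx Pod is available, or exit non-zero if the timeout is reached first.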

Scale our ReplicaSet

Let’s scale the Deployment (and the ReplicaSet it manages) out to 10 replicas:

kubectl scale --replicas=10 deployment/nginx-to-scaleout

Some pods will stay in the Pending state because the existing nodes cannot satisfy their resource requests. This triggers the cluster-autoscaler to scale out the EC2 fleet.
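To see why pods can end up Pending, we can add up what the scaled-out Deployment asks for. The sketch below uses only the values from nginx.yaml (500m CPU and 512Mi memory requested per Pod) and the replica count of 10. Whether that total fits on the existing nodes depends on their instance type and on what is already running, which this page does not state.

```shell
# Total resources requested by the scaled-out Deployment.
# Per-Pod values are taken from the requests section of nginx.yaml.
replicas=10
cpu_millicores_per_pod=500
memory_mib_per_pod=512

total_cpu_millicores=$(( replicas * cpu_millicores_per_pod ))
total_memory_mib=$(( replicas * memory_mib_per_pod ))

echo "Total CPU requested: ${total_cpu_millicores}m"      # prints 5000m (5 vCPU)
echo "Total memory requested: ${total_memory_mib}Mi"      # prints 5120Mi (5 GiB)
```

Any Pod whose request cannot be placed on a node with enough unreserved capacity remains Pending until more nodes join the cluster.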

kubectl get pods -l app=nginx -o wide --watch
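If the watch output is hard to follow, counting only the Pending pods gives a quick progress indicator. This is a minimal sketch: the function name `count_pending_pods` is hypothetical, and it relies on the standard `--field-selector` and `--no-headers` options of `kubectl get`.

```shell
# Hypothetical helper: print how many pods matching a label selector are Pending.
# $1 = optional label selector (defaults to app=nginx, the label used in nginx.yaml).
count_pending_pods() {
  selector="${1:-app=nginx}"
  kubectl get pods -l "$selector" \
    --field-selector=status.phase=Pending \
    --no-headers 2>/dev/null | wc -l | tr -d ' '
}
```

Running `count_pending_pods` repeatedly should show the number dropping toward 0 as new nodes become available.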

View the cluster-autoscaler logs

kubectl -n kube-system logs -f deployment/cluster-autoscaler

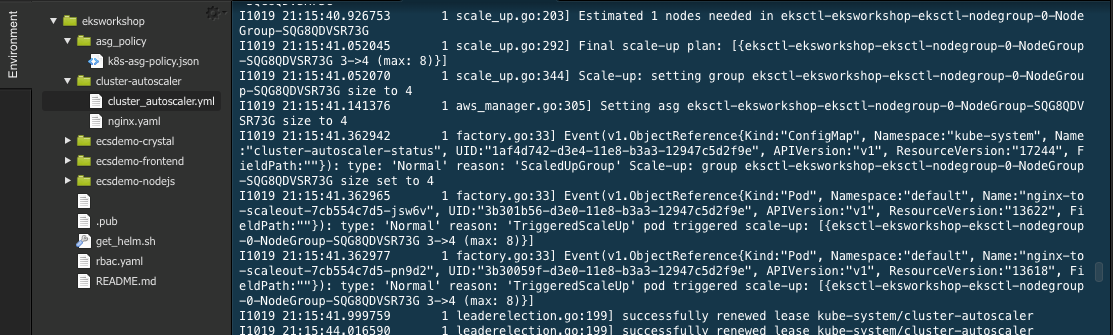

You will notice Cluster Autoscaler events similar to the ones below:

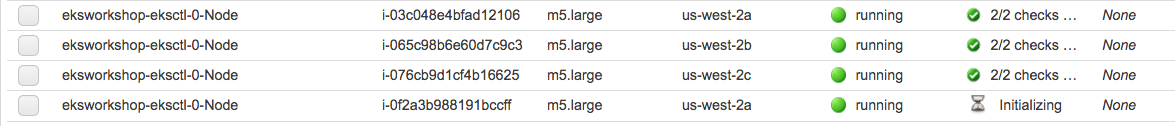
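The scale-up can also be checked from the Kubernetes event stream rather than the logs. The sketch below assumes that Cluster Autoscaler records events with the reason `TriggeredScaleUp` on the pods that caused a scale-up, and that the nginx pods run in the current namespace. The function name `show_scale_up_events` is hypothetical.

```shell
# Hypothetical helper: list events whose reason is TriggeredScaleUp,
# oldest first. Assumes the pods live in the current namespace.
show_scale_up_events() {
  kubectl get events \
    --field-selector reason=TriggeredScaleUp \
    --sort-by=.lastTimestamp
}
```

If nothing is listed, the events may have expired or the scale-up may not have started yet. The cluster-autoscaler logs remain the more complete source.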

Check the EC2 section of the AWS Management Console to confirm that the Auto Scaling groups are scaling up to meet demand. This may take a few minutes. You can also follow the pod deployment from the command line, where the pods should transition from Pending to Running as nodes are added.

You can also confirm the new nodes with kubectl:

kubectl get nodes

Output